Code faster, ship ... the same?

AI tools for engineering teams are changing fast. Really fast. New capabilities drop weekly, and everyone from ICs to engineering leaders to board members is scrambling to figure out what it all means for productivity, measurement, and value.

As we move from simple chat interfaces toward orchestrated agent-based systems, you need to understand both the opportunities and the significant challenges ahead.

I’m bullish on AI’s potential. But I’m deeply skeptical of how most people think they’ll get value from it. We’re in a moment comparable to the mid-1990s, when Tim Berners-Lee had just invented the World Wide Web. Success was measured by visitor counters on GeoCities homepages, and some dude was just trying to sell books.

Tell me, what did you measure then?

The future is unknowable, but the transformation seems inevitable

Here’s a thought experiment: if you could write code for your entire backlog in the next 24 hours, at reasonably high quality, would you? Of course you would.

But would your organization suddenly ship dramatically more valuable features? Probably not.

That newly written code would join the pile of other code waiting to be reviewed, deployed, documented, and actually shipped to customers who may or may not want it.

Turns out, code generation was never actually your bottleneck.
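To make the thought experiment concrete, here’s a toy model (our own illustration, with made-up stage names and capacities) of delivery as a serial pipeline. In a pipeline like this, finished work can’t leave faster than the slowest stage allows, so multiplying coding capacity leaves shipped throughput exactly where it was.

```python
# Toy model: a serial delivery pipeline where each stage can process a fixed
# number of work items per week. Stage names and capacities are hypothetical.

def shipped_per_week(capacities: dict[str, float]) -> float:
    """Steady-state throughput of a serial pipeline is capped by its slowest stage."""
    return min(capacities.values())


def bottleneck(capacities: dict[str, float]) -> str:
    """Name of the stage with the lowest capacity."""
    return min(capacities, key=capacities.get)


before = {"coding": 10, "review": 6, "testing": 8, "deploy": 12}

# Suppose AI makes coding 10x faster and nothing else changes.
after = {**before, "coding": before["coding"] * 10}

print(bottleneck(before), shipped_per_week(before))  # review 6
print(bottleneck(after), shipped_per_week(after))    # review 6
```

Doubling review capacity instead (6 to 12) would raise throughput to 8 items per week, at which point testing becomes the new constraint. Real delivery systems are messier than this, but the shape of the argument holds.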

And the truth is, AI can only amplify what you already have. Strong foundations get stronger, and weak foundations crumble.

You need strong foundations first

Now that we’ve established code isn’t the bottleneck, let’s talk about what actually matters: results.

Your teams will get better results when they use AI to generate inspectable, testable code rather than seeking direct answers to complex questions.

Think of it this way: when AI produces code, your teams can review it, modify it, and validate it through existing QA processes. This lets AI help where it’s reliable while keeping errors in check.

But — and this is a big but — this only works if you already have robust development guardrails.

I’m talking about:

- Comprehensive automated testing (not just the happy path)

- Mature CI/CD pipelines (that actually catch issues)

- Proper code review processes (where people actually review)

- Blue/green deployments (so you can roll back quickly)

- All those other DevOps fundamentals you’ve been meaning to implement

As AI systems become more autonomous, these guardrails become even more important. AI agents will fail catastrophically without testing frameworks to catch errors or deployment safeguards to prevent problematic code from reaching production.

If you’ve got discipline around these practices, great! You can safely benefit from current AI tools and future agent orchestration. You can experiment because your existing systems will catch errors, validate functionality, and prevent serious failures.

But if you’ve consistently deprioritized these “overhead” tasks in pursuit of velocity?

You’re in for a rough ride. And honestly, that’s the part that keeps me up at night.

What AI can and can’t do

Let’s be real about what we’re dealing with: sophisticated pattern matching and text generation systems.

Yes, vendors claim these models “think” now. But “think” is doing a whole lot of heavy lifting in that sentence.

Current AI coding tools:

- Don’t really learn from your interactions

- Don’t always retain context between sessions

- Won’t always follow instructions as expected

- Lose accuracy as context grows

- Will confidently produce incorrect information

Your experienced engineers are right to worry. They’re thinking about bugs that get missed, mysterious dependencies, and code that becomes unmaintainable over time.

If you’re in a regulated industry? You’ve got a whole other set of compliance and data privacy challenges to consider.

What still matters: the same stuff as before

Despite AI’s emergence — or maybe because of it — the fundamentals of engineering effectiveness remain unchanged.

AI doesn’t require new productivity frameworks or a resurgence of per-developer productivity metrics. It reinforces why team outcomes matter more than individual activities.

Do your teams deliver value more effectively? Are cycle times improving? Are quality standards maintained?

If AI tools provide measurable benefit, these fundamental metrics should improve. If they don’t improve, the tools aren’t delivering meaningful value — regardless of how impressive the generated code looks or how much developers enjoy using them.
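One hedged way to check whether cycle times are improving: compare the median cycle time of work items completed before and after an AI rollout. This sketch assumes you can export start and finish timestamps per item (the records below are invented), and it uses the median because cycle-time distributions tend to have long tails that skew the mean.

```python
from datetime import datetime
from statistics import median

# Hypothetical export: (started, finished) ISO timestamps per completed item.
before_rollout = [
    ("2024-01-01T09:00", "2024-01-03T09:00"),  # 2 days
    ("2024-01-02T09:00", "2024-01-06T09:00"),  # 4 days
    ("2024-01-03T09:00", "2024-01-12T09:00"),  # 9 days
]
after_rollout = [
    ("2024-03-01T09:00", "2024-03-02T09:00"),  # 1 day
    ("2024-03-04T09:00", "2024-03-07T09:00"),  # 3 days
    ("2024-03-05T09:00", "2024-03-13T09:00"),  # 8 days
]


def cycle_times_days(items: list[tuple[str, str]]) -> list[float]:
    """Elapsed time from start to finish for each item, in days."""
    return [
        (datetime.fromisoformat(done) - datetime.fromisoformat(start)).total_seconds() / 86400
        for start, done in items
    ]


def median_cycle_time(items: list[tuple[str, str]]) -> float:
    return median(cycle_times_days(items))


print(f"before: {median_cycle_time(before_rollout):.1f} days")  # before: 4.0 days
print(f"after:  {median_cycle_time(after_rollout):.1f} days")   # after:  3.0 days
```

Three items per group proves nothing, of course. In practice you’d want a much larger sample and some awareness of confounders such as team changes, seasonality, or shifts in the kind of work being done.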

The economics of AI adoption

The conversation around AI investment has evolved recently, too. It’s no longer “Does this provide value?” but “How do we manage the costs of this thing?”, as vendor pricing models shift toward usage-based systems that are already creating significant, unpredictable expenses (ask me how I know).

These tools provide enough ergonomic benefit that your teams won’t abandon them once integrated. The developer experience improvements — faster code completion, quicker debugging, automated documentation — create baseline expectations. Try taking them away and watch the pitchforks come out.

You’ll find yourself committed to ongoing AI spending regardless of whether you can demonstrate productivity improvements. It’s like giving developers dual monitors in 2010 — technically optional, but practically mandatory.

So how do you justify the cost?

Enterprise AI tools can cost hundreds or thousands per developer annually. Your engineers love them — and why wouldn’t they? AI helps with repetitive tasks, context switching, navigating unfamiliar codebases. The subjective value is immediate and clear. Demonstrating ROI through traditional metrics, however? Good luck with that.

But it’s important to remember that it's not just about the work that happens in the code. When justifying the total return on your AI investment, there are important factors beyond productivity:

- Talent dynamics: In competitive hiring markets, AI tools are becoming table stakes. Engineers increasingly expect them.

- Cognitive relief: Even when AI doesn’t accelerate delivery, it reduces mental load. This might help prevent burnout and improve satisfaction. Happy developers are productive developers.

- Learning curves: AI tools significantly flatten onboarding for new technologies and codebases. This efficiency won’t show up in your metrics but creates organizational resilience.

- Creative capacity: When engineers spend less mental energy on boilerplate, they have more for architectural improvements and innovation.

- Future-proofing: AI tools may not deliver significant gains today, but they may someday soon. It’s better to have a team well-versed in AI-assisted development than scrambling to catch up.

Then there are the equally important strategic risks:

- Dependency creation: As teams integrate AI tools, removing them becomes increasingly disruptive. Vendors know this. Prepare for price increases.

- Cost unpredictability: Usage-based pricing can explode. I’ve seen bills triple in a quarter. Budget accordingly.

- Skill atrophy: Over-reliance on AI assistance can diminish fundamental programming skills. You still need engineers who can think, not just prompt.

Take a portfolio approach to your AI investments: some for immediate developer experience, others targeting specific bottlenecks, and others exploring future capabilities. Some investments carry more risk than others, and your portfolio strategy should account for that.

Organizational approaches that actually work

Given these realities (AI amplifies what you already have, you’ll pay for ergonomics regardless of productivity gains, and focusing on the wrong metrics will lead you astray), what should you actually do?

- Find your actual bottlenecks: Map your entire delivery pipeline. Where does work actually get stuck? Code review? Testing? Deployment? Those three-week “quick syncs” with product?

- Think in systems: Optimizing something that isn’t your bottleneck won’t improve overall performance.

- Shore up the fundamentals: Automated testing, CI/CD pipelines, code review processes, deployment safeguards. These aren’t nice-to-haves anymore. They’re table stakes when you’re working with AI-generated code. (Or any code, honestly.)

- Measure what mattered before: Cycle time, deployment frequency, change failure rate, investment balance, developer experience. Not “percentage of AI-generated code per engineer” or whatever metric a vendor is pushing.

- Create safe spaces to experiment: Let teams try different approaches. Don’t mandate a single tool if you can support several during the learning and experimentation phase.

- Stay curious but skeptical: Be open to potential while maintaining healthy skepticism about limitations. If it sounds too good to be true ... you know the rest.
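As a rough sketch of two of the metrics above, deployment frequency and change failure rate can be computed from a simple deployment log. The record shape (a date plus a hypothetical `caused_failure` flag) is an assumption for illustration; teams define “failure” differently, and real data would come from your CI/CD or incident tooling.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    day: date
    caused_failure: bool  # hypothetical flag: did this deploy trigger an incident or rollback?


def deployment_frequency(deploys: list[Deployment], period_days: int) -> float:
    """Average deployments per week over the observation period."""
    return len(deploys) / (period_days / 7)


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Share of deployments that led to a failure in production."""
    if not deploys:
        return 0.0
    return sum(d.caused_failure for d in deploys) / len(deploys)


# Invented two-week log for illustration.
log = [
    Deployment(date(2024, 5, 1), False),
    Deployment(date(2024, 5, 2), True),
    Deployment(date(2024, 5, 2), False),
    Deployment(date(2024, 5, 9), False),
]

print(deployment_frequency(log, period_days=14))  # 2.0
print(change_failure_rate(log))                   # 0.25
```

Notice that neither calculation cares who wrote the code or whether an AI generated it. That’s the point: these measure what the team delivers, not how the keystrokes were produced.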

But teams can’t do this alone. It’s not their job to negotiate enterprise contracts, establish data governance, or implement security controls. That’s where you come in.

Organizational enablement means:

- Negotiating contracts that won’t bankrupt you

- Implementing security controls

- Providing training on both opportunities and risks

- Creating policies that enable rather than restrict

But remember: enablement only works with proper development practices already established. If you’ve been postponing them, you’re in a tough spot: you need AI tools to remain competitive but lack the infrastructure to use them safely.

And there’s no shortcut here. Sorry.

Where we’re headed

Engineering roles will transform significantly over the next 1-2 years, and I suspect we’ll see major changes within 6-9 months.

However, nobody knows the full scope of what’s coming. Anyone who claims otherwise is selling something.

And here you are, trying to make strategic decisions while the ground shifts beneath your feet. It’s uncomfortable. It’s uncertain. It’s also where we all are right now.

So what should we do?

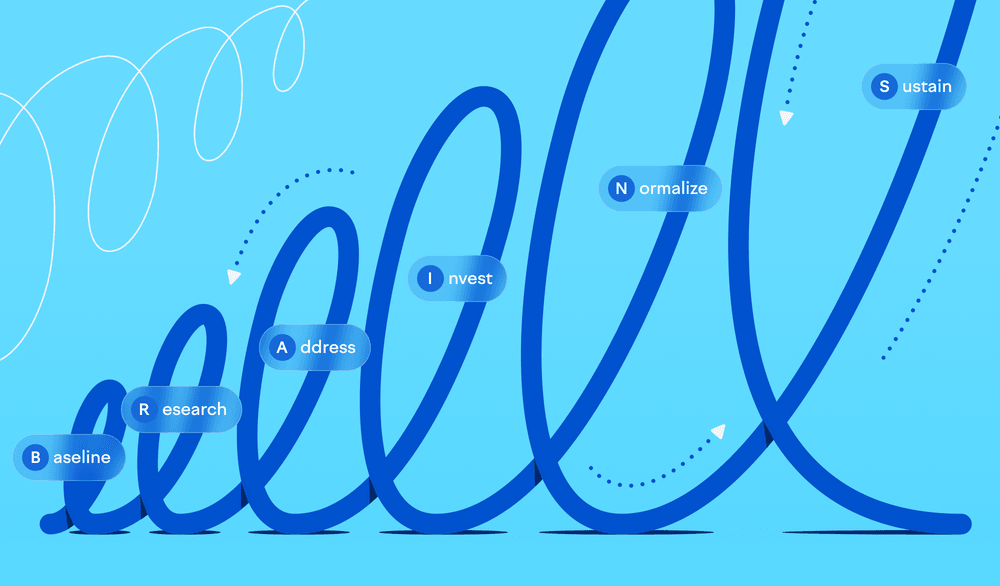

First, shake off some of the hype and remind yourself that none of this changes the fundamentals. To build an effective engineering organization, you still need to cover your three pillars, focus on team delivery capabilities, and maintain disciplined development practices.

Remember our thought experiment? It shows us that while AI is powerful, it’s not magic — and it amplifies what you already have, good or bad.

So keep experimenting, learning, and dare I say it — having fun with it.

And maybe, just maybe, you’ll figure out how to code faster and ship more.


More content from Swarmia