Complementing engineering metrics with developer experience surveys

Seeing engineering productivity data for the first time can be an eye-opening experience. You notice a high pull request cycle time, dig deeper into its components, and see that reviews are causing delays. That should be fixed, for sure! But why is it happening? What can you do about it?

The answers often already exist but are scattered around in developers’ heads. Some developers might point out that unclear ownership or siloed codebase knowledge causes the reviews to take a long time. Surveys are brilliant tools for collecting this type of information at scale, showing what’s an isolated incident and what’s a broad pattern.

Yet, the process of gathering perceptions from humans is very different from pulling metrics from systems. Not only do you need high-quality questionnaires, but you also have to consider the things around them: you must convince people to put in the time and effort to respond, and to do so with the right kind of motivation. Otherwise, you can’t trust the data. Furthermore, you should recognize human biases like those related to recency, expectations, and adaptation when interpreting the results.

Here’s how to run effective surveys to elevate your understanding of your organization’s developer experience, productivity bottlenecks, and necessary fixes.

Surveys with system metrics: You can have it both ways

Most of your organization’s continuous improvement should take place locally in autonomous teams. Provided with the right information, they can identify, diagnose, and solve many problems on their own. They have the best visibility into their hands-on work, after all. However, some obstacles are big, tangled, or widespread. To identify and address them, you must collect insights and recognize patterns across the organization.

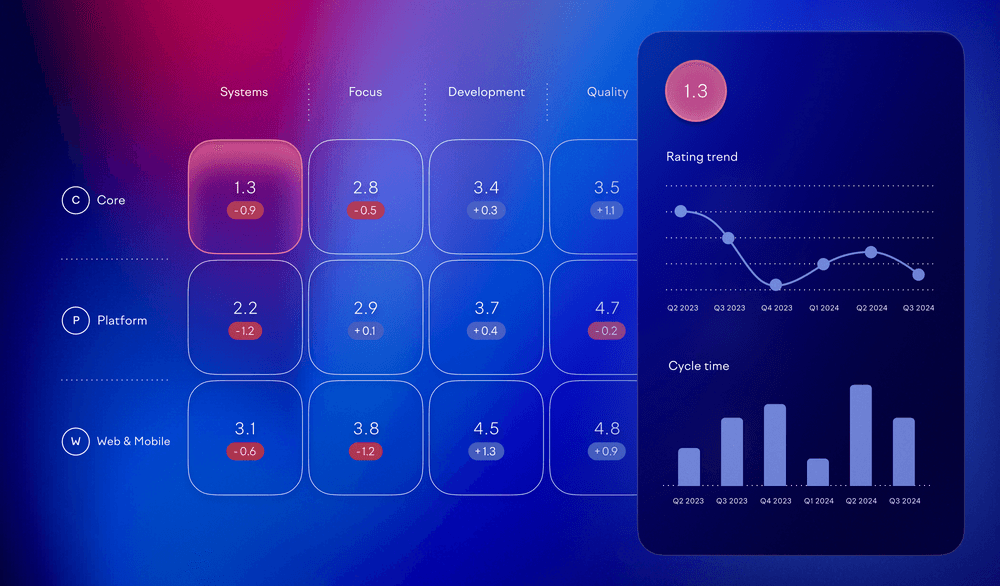

Some of this visibility can be achieved with system metrics gathered from your source code hosting service, issue tracker, CI/CD provider, and so on. They give you that high-level view but have their limits. How do you assess the quality of your documentation, for example? Many hard-to-quantify questions relate to developer experience: how people perceive the development infrastructure, feel about their work, and value it. Despite being more elusive to measure, developer experience can have a sizeable business impact.

Surveys are your tool of choice for thoroughly gathering quantitative and qualitative data about these types of questions. Rating scales provide numbers for comparisons and trends, while open-ended comments enable a more intricate understanding and actionable ideas.

When we ran our first developer survey at Swarmia, we saw how effective surveys can be at exposing blind spots. All our Swarmia app dashboards showed that we had excellent focus and were building things quickly and predictably. Yet, the survey revealed that none of our teams felt they had enough time to experiment with new ideas. That was a crucial finding because our long-term business relies not only on shipping but also on innovating.

So, since surveys can give a nuanced view of these hard-to-measure dimensions, should you ditch system metrics altogether? Of course not. Surveys have their limitations, which become evident, for example, when asking people to estimate their change lead time with a questionnaire:

- Lack of granularity. A response option “One day to one week” could suggest anything from doing well to having a problem.

- Human bias. People often recall idealized or recent examples instead of representative ones.

- Manual effort. Most developers find routine data input demotivating, especially if it could be automated.

- Infrequent data. Because it’s manual, there are limits to how often you can ask about this.

In this case, automatically collecting the data from your systems is a no-brainer. However, you often get the best results by combining surveys with system metrics. As mentioned above, surveys might help you figure out how to reduce those long review delays you noticed in your pull request data. Or the other way around: maybe the survey indicates people feel their focus is spread across too many things, and you can get additional context from the work-in-progress numbers in your issue tracker.
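To make the granularity point concrete, here is a minimal Python sketch. The team names, timestamps, and bucket boundaries are all made up for illustration, and it assumes you can export a first-commit and a deploy timestamp for each change. It shows two teams whose median change lead times differ by a factor of more than four yet would land in the same “One day to one week” survey option.

```python
from datetime import datetime
from statistics import median

# Hypothetical (first commit, production deploy) timestamp pairs per team.
CHANGES = {
    "team-a": [
        ("2024-03-04T09:00", "2024-03-05T15:00"),
        ("2024-03-06T10:00", "2024-03-07T18:00"),
        ("2024-03-11T08:00", "2024-03-12T20:00"),
    ],
    "team-b": [
        ("2024-03-04T09:00", "2024-03-09T09:00"),
        ("2024-03-11T10:00", "2024-03-17T16:00"),
        ("2024-03-18T08:00", "2024-03-24T08:00"),
    ],
}


def lead_time_hours(start: str, end: str) -> float:
    """Hours elapsed between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 3600


def survey_bucket(hours: float) -> str:
    """Map a lead time to an assumed, coarse survey response option."""
    if hours < 24:
        return "Less than one day"
    if hours <= 24 * 7:
        return "One day to one week"
    return "More than one week"


def summarize(changes: dict) -> dict:
    """Return {team: (median lead time in hours, matching survey bucket)}."""
    summary = {}
    for team, pairs in changes.items():
        med = median(lead_time_hours(s, e) for s, e in pairs)
        summary[team] = (med, survey_bucket(med))
    return summary


for team, (hours, bucket) in summarize(CHANGES).items():
    print(f"{team}: median {hours:.0f} h -> {bucket}")
```

With this sample data, both teams fall into the same bucket even though one has a median of 32 hours and the other 144 hours, which is the kind of difference only the system data can show.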

You can use survey results to some extent to validate whether your actions between surveys result in the desired outcomes. But keep in mind that just because people aren’t complaining about a thing anymore doesn’t mean you solved it. It helps to complement your initiatives with real-time system metrics for a faster feedback loop to track your progress. For example, you can define and measure User Experience Objectives (UXOs) to quantify improvements to your developer tooling and processes.

Surveys come in shapes and sizes

The type of developer survey you should run depends on your needs, and you can run different ones at various cadences and occasions. Here are some examples:

- Overview surveys. Recognize where to focus and where your blind spots are. Get started with our set of 35 questions.

- Drill-down surveys. Get an in-depth understanding of a narrow topic, like code reviews.

- Pulse surveys. Analyze trends in overall sentiment with short, frequent surveys.

- Paper cut surveys. Allow people to report their frustrations right when they encounter them.

- Trigger-based surveys. Launch contextual surveys at specific events, like when someone uses an internal tool for the first time.

Overview surveys are probably the best way to get started, and you may want to run them every three to six months. If you identify particular problem areas, you can find out more with an ad hoc drill-down survey. Some teams run pulse surveys to quickly notice if something needs attention. Their downside is that you might need to spend additional effort to find out what caused a change in sentiment. Paper cut surveys and trigger-based surveys are particularly helpful if you have a platform team that has the time to process that feedback and improve your developer tooling accordingly.

How to lose insights and alienate people

“They run surveys in my company only when they know they’ll get the results they want.”

“Nothing ever changes between surveys. I’m tired of giving the same feedback every time.”

“The leadership discourages people from speaking up. There’s nothing in it for me to tell how bad things really are.”

There are numerous ways to botch even a well-designed developer survey. These blunders come in many forms, but most share a trait: distrust that the responses will be used constructively. Top-notch questions and scales won’t save you if people feel their answers don’t matter or work against them (or their colleagues).

Low response rates can be a symptom of this. If fewer than 80% of people answer your surveys, you might want to reflect on whether you have a problem with incentives, privacy, or purpose. The lower the response rate, the less representative and more biased the results are likely to be. The other possible consequence of a lack of trust is even worse. If people answer untruthfully, not only does it hide problems, but it might be very difficult to notice that based on the results alone.
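As a rough illustration of that check, the Python sketch below computes response rates per team and lists the teams under the 80% mark. The team names and counts are invented, and the function names are only placeholders for whatever your survey tool exports.

```python
def response_rates(invited: dict, responded: dict) -> dict:
    """Share of invited people who responded, per team."""
    return {
        team: responded.get(team, 0) / count
        for team, count in invited.items()
        if count > 0
    }


def teams_below(rates: dict, threshold: float = 0.8) -> list:
    """Teams whose response rate is under the threshold, sorted by name."""
    return sorted(team for team, rate in rates.items() if rate < threshold)


# Hypothetical headcounts and response counts.
invited = {"platform": 10, "mobile": 8, "web": 12}
responded = {"platform": 9, "mobile": 5, "web": 10}

rates = response_rates(invited, responded)
for team in teams_below(rates):
    print(f"{team}: {rates[team]:.0%} response rate, worth a closer look")
```

A low number here is a prompt for a conversation about incentives, privacy, or purpose, not a score to hold against the team.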

Unhealthy incentives

Gaming survey data is easy, so make sure there’s no reason to do so. People won’t answer honestly if you:

- reward or punish based on the results,

- stack-rank teams or pit them against each other in any way,

- evaluate manager performance based on team health, or

- try to identify and assess individual people.

Insufficient, excessive, or unclear privacy

If your organization is low on trust or you explore particularly sensitive topics, you might need to resort to anonymous surveys to make respondents feel safe to answer. But then you have to face the “privacy-utility tradeoff”: the less you know about the respondent, the harder it will be to take action. Usually, you should at least track the results by team.
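One common way to balance the two sides of that tradeoff is to report per-team averages only when enough people in the team have answered. The Python sketch below illustrates the idea. The minimum group size of five is an assumption chosen for the example, not a recommendation from this article, and the data is made up.

```python
from collections import defaultdict
from statistics import mean


def team_averages(responses, min_group_size: int = 5) -> dict:
    """Average rating per team, or None when too few people responded.

    `responses` is an iterable of (team, rating) pairs. Suppressing small
    groups makes it harder to trace a rating back to an individual.
    The default threshold of 5 is an illustrative assumption.
    """
    by_team = defaultdict(list)
    for team, rating in responses:
        by_team[team].append(rating)
    return {
        team: mean(ratings) if len(ratings) >= min_group_size else None
        for team, ratings in by_team.items()
    }


# Hypothetical responses to a single 1-5 rating question.
responses = [
    ("web", 4), ("web", 5), ("web", 3), ("web", 4), ("web", 4),
    ("mobile", 2), ("mobile", 3),
]

for team, average in team_averages(responses).items():
    shown = f"{average:.1f}" if average is not None else "hidden (too few responses)"
    print(f"{team}: {shown}")
```

Whatever threshold you pick, telling respondents about it up front is part of explaining how the results will be used.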

Regardless of the privacy level, you should clearly explain how the results will be used. Ambiguity can unnecessarily scare people off from answering truthfully. Misunderstandings leading to incidents could erode trust and compromise your next survey.

Lack of purpose

Psychological safety (or, to put it another way, being a decent human being) is only the first step. People are busy, and surveys are yet another thing on their long to-do lists. If they don’t know what the point of responding is, you can’t really blame them for prioritizing something else. If you run surveys too often or don’t coordinate with your company’s other feedback mechanisms, like employee engagement surveys, you risk unnecessary overlap and not having enough time to improve in between.

You need to demonstrate that you use the survey results to make a positive impact on your organization. Make sure you communicate how exactly the survey helps the business and makes individual respondents’ work more pleasant.

The results are in… Now what?

Okay, so you got an average of 3.8 out of 5 for “Our automated tests catch issues reliably”. Is that good or bad? You have to use your own judgment. Is that better than 3.5 for “I never get blocked by documentation gaps”? Not necessarily, since it’s an apples-to-oranges comparison between questions.

To learn from the numbers, it helps to compare

- ratings between different parts of your organization,

- changes from previous survey results, or

- benchmarks from other companies in the industry.

That means there’s a benefit in using a standardized set of questions and asking the same ones at a regular cadence.
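The Python sketch below shows what the second kind of comparison might look like in practice: the change in average rating per team and question between two survey rounds. The question keys, team names, and ratings are hypothetical, and it assumes both rounds used the same questions and the same 1-5 scale.

```python
from statistics import mean

# Hypothetical 1-5 ratings per team and question for two survey rounds.
PREVIOUS = {
    "web": {"tests_reliable": [4, 4, 3, 4], "docs_ok": [3, 4, 3, 4]},
    "mobile": {"tests_reliable": [2, 3, 3, 2]},
}
CURRENT = {
    "web": {"tests_reliable": [4, 4, 4, 4], "docs_ok": [3, 3, 3, 3]},
    "mobile": {"tests_reliable": [3, 4, 3, 4]},
}


def rating_changes(previous: dict, current: dict) -> dict:
    """Change in mean rating per team and question, for questions in both rounds."""
    changes = {}
    for team, questions in current.items():
        earlier = previous.get(team, {})
        changes[team] = {
            question: round(mean(ratings) - mean(earlier[question]), 2)
            for question, ratings in questions.items()
            if question in earlier
        }
    return changes


for team, questions in rating_changes(PREVIOUS, CURRENT).items():
    for question, change in questions.items():
        print(f"{team} / {question}: {change:+.2f}")
```

The comparison only works within a single question over time or across teams. Comparing the numbers for two different questions runs into the apples-to-oranges problem described above.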

After identifying your problems and focus areas, it’s time to dig deeper. Open comments in surveys usually give you a head start. People facing problems have had the time to think about the root causes and potential solutions, so they often write about those.

Next, who’s responsible for addressing the survey results? That depends on the problem, which means you need both bottom-up and top-down approaches. Individual teams might be best positioned to solve ways-of-working problems like slow review times. However, wider issues, like a tangled organizational structure or unreliable CI, must be solved at a higher level.

To facilitate both discussions, it’s helpful to openly share the results with everyone and empower teams to take the initiative. For example, each team could dedicate one of their retrospectives to analyzing their survey results and planning actions based on them. In addition, you should drive an open, company-wide conversation about larger structural improvements.

Documenting focus areas, defining action points, and openly tracking progress help demonstrate that you care and that surveys are having an effect. It’s useful to get teams to take ownership of their actions and report progress in a way that still allows them to stay in control.

Make sure you allow enough time for your actions to take effect before running the next survey. Overly frequent surveys are frustrating if people need to report the same problems without seeing improvement.

Tell me something I don’t know

Surveys are a powerful way to uncover insights about your team’s developer experience that metrics alone can’t reveal. By thoughtfully designing them based on your organization’s needs, you can address issues that might otherwise stay hidden.

The goal isn’t just to collect data but to build a culture of continuous improvement where feedback leads to change. After all, a survey is only as valuable as the actions it leads to.
