Engineering leaders share their wins and challenges in evaluating team performance

Earlier this year, Swarmia and LeadDev partnered with a research firm to understand the metrics that software engineering teams rely on and the bottlenecks they are struggling with.

The timing could not have been better. Right around when we received the initial data from the research, McKinsey published its now-infamous article claiming that it’s possible to measure developer productivity. The engineering managers and leaders who took part in our research shared a much more nuanced perspective on the topic.

SPACE and DORA gain traction, but uncertainty remains

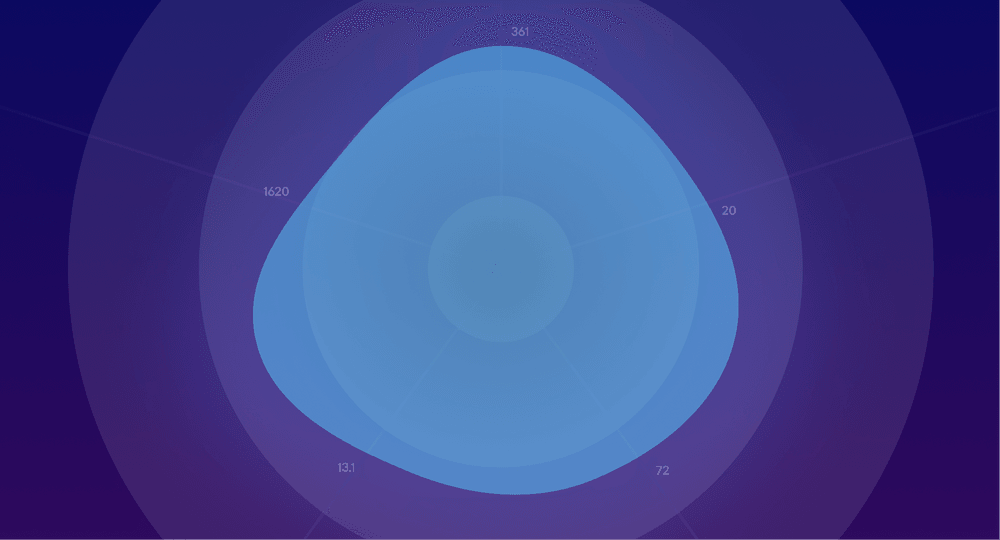

We found that while the industry is at least somewhat coalescing around SPACE and DORA as high-level frameworks for understanding productivity, there’s still a great deal of uncertainty about the details: 67% of respondents said they had difficulty choosing the right metrics to evaluate their teams’ performance. They’re also looking for more specific, agreed-upon definitions of terms like “velocity” and “well-being,” which neither framework attempts to provide.

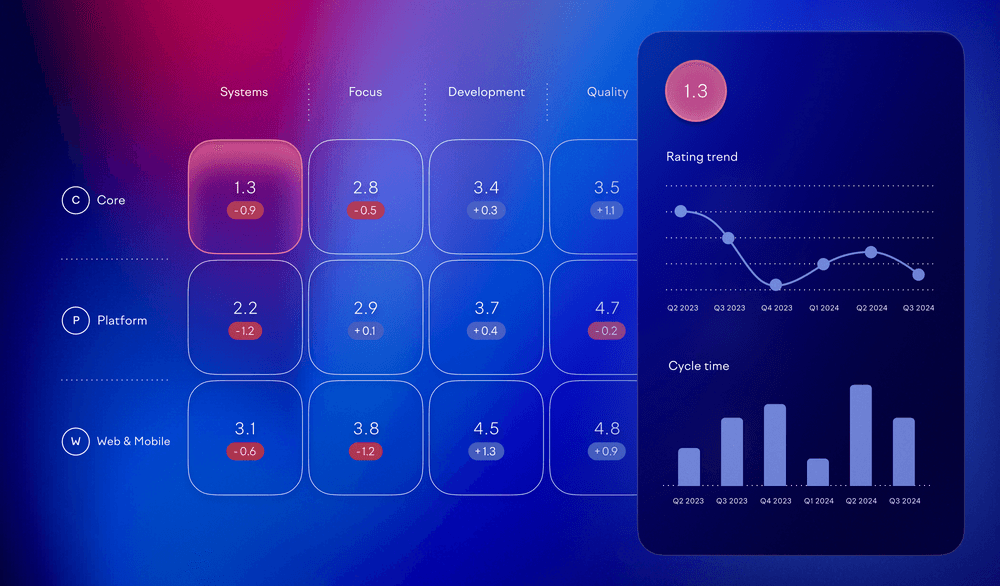

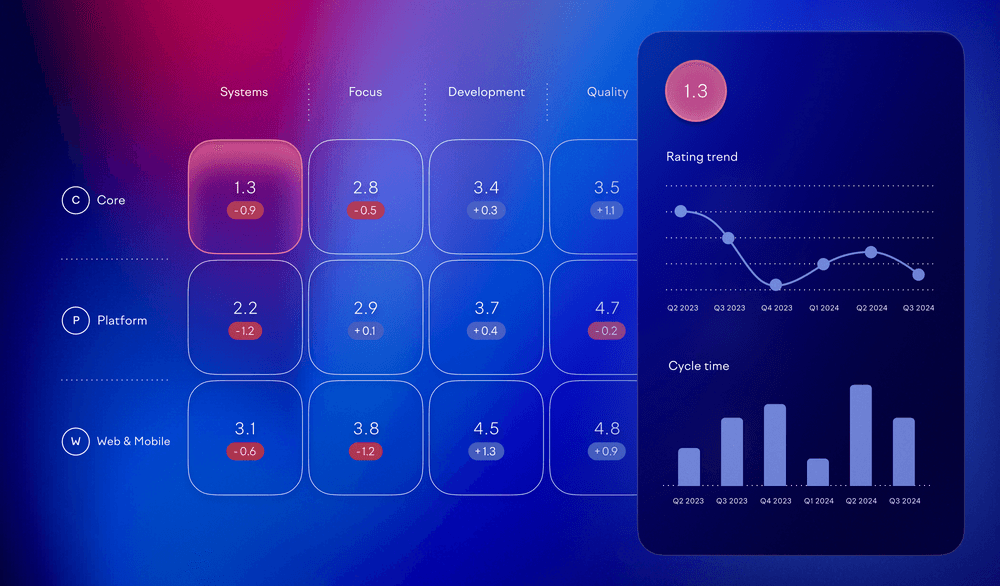

When managers and leaders do choose metrics, cycle time is the most common choice, but the rest of the top five are close behind: lead time, deployment frequency, work-in-progress, and change failure rate also ranked high, and no single metric stood out from the others. This is to be expected, since no one metric can capture a fundamentally human domain.

Several of those metrics come straight from the DORA framework, which, along with SPACE, is a frequent reference for us at Swarmia and for the industry as a whole. Among respondents familiar with the two frameworks, about half said they had found a way to make them effective or very effective, and only a small percentage found them entirely ineffective. Still, a surprising number of respondents weren’t familiar with the frameworks at all: 29% were unfamiliar with DORA, and 55% were unfamiliar with SPACE.

Muddy priorities and limited staffing are key bottlenecks

Teams are craving clearer priorities and more staffing: these two challenges accounted for 53% of responses when we asked about the main source of engineering bottlenecks. Yet 88% of respondents said they had a good or complete understanding of strategic business goals. There is clearly a gap between “understanding” and “clarity.” Perhaps business-level priorities don’t translate well into engineering work, or perhaps competing “priorities” emerge when the rubber meets the road.

The post-ZIRP (zero interest-rate policy) upheaval is real and ongoing, and it may have overshadowed more perennial concerns: working with other teams (12%) and documentation (3%) were cited far less often as bottlenecks.

Healthy intent, and an openness to measurement

While the McKinsey article certainly ruffled plenty of feathers, the survey results suggest that teams may be more open to this kind of measurement than leaders expect: just 20% of respondents cited resistance to measurement as a key challenge. Perhaps that reflects the fact that only 6% of respondents wanted to use team performance metrics for promotion and firing decisions. Most respondents said their efforts focus instead on identifying process bottlenecks and opportunities to improve.

Get the report

There’s much more in the report to help inform your own engineering effectiveness journey. You can download the full report below.
