Introducing software engineering metrics to your organization

There’s increasing demand, from both senior engineering leaders and non-technical executives, to measure software engineering processes objectively. Choosing the right measurements can pave the path to continuous improvement; choosing the wrong metrics can create unintended incentives. Of course, there’s always the risk of overemphasizing easily quantifiable factors at the expense of equally important qualitative aspects.

This demand for more objective measures is driven by several goals:

- Identifying inefficiencies and bottlenecks. Metrics can reveal issues in the development pipeline that might otherwise go unnoticed or be misunderstood. For example, tracking cycle time can highlight delays in the code review process or testing phases that weren’t apparent through anecdotal evidence alone.

- Informing resource allocation. With concrete data on where time and effort are spent, engineering leaders can make more informed decisions about where to invest resources. This might mean reallocating team members, investing in new tools, or prioritizing technical debt reduction.

- Demonstrating value to stakeholders. Metrics provide a common language for communicating the value and progress of engineering work to non-technical stakeholders. This is particularly important as software becomes increasingly central to business operations across industries.

- Driving continuous improvement. By establishing baselines and tracking progress over time, teams can set meaningful goals and measure their improvement. This creates a culture of ongoing optimization rather than sporadic, reactive changes.

- Enhancing predictability. When properly understood and applied, metrics can improve the accuracy of project estimations and help manage expectations around delivery timelines.

This post explores why engineering metrics matter, discusses which metrics to focus on (and which to avoid), covers common ways metrics go wrong, and examines how to use them effectively to drive improvement.

Which metrics?

While no single set of metrics can capture the full complexity of software engineering, several categories of metrics have emerged as valuable tools for measurement and improvement.

DORA (DevOps Research and Assessment) metrics have become widely adopted to assess software delivery performance. These metrics include deployment frequency, lead time for changes, mean time to recovery, and change failure rate. Together, they provide a high-level view of how quickly and reliably an organization can deliver software changes.
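As a rough illustration of how the four DORA metrics relate to raw delivery data, here is a minimal Python sketch. It assumes a hypothetical `Deployment` record (deploy time, earliest commit time, whether the change caused a production failure, and when service was restored). In practice these fields would have to be assembled from your CI/CD and incident tooling, and DORA’s own definitions are more nuanced than this simplification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real data would come from CI/CD and incident tools.
    deployed_at: datetime
    committed_at: datetime  # earliest commit included in this deployment
    failed: bool = False  # did this change cause a failure in production?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Summarize the four DORA metrics over a window of `window_days` days."""
    if not deploys:
        raise ValueError("need at least one deployment")
    failures = [d for d in deploys if d.failed]
    # Simplification (assumption): the failure is taken to start at deploy time.
    restore_hours = [
        (d.restored_at - d.deployed_at).total_seconds() / 3600
        for d in failures
        if d.restored_at is not None
    ]
    lead_hours = [(d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deploys]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time_hours": median(lead_hours),
        "change_failure_rate": len(failures) / len(deploys),
        "mean_time_to_restore_hours": (
            sum(restore_hours) / len(restore_hours) if restore_hours else 0.0
        ),
    }


# Usage: two deployments in a one-week window, one of which caused an incident.
t0 = datetime(2024, 1, 1, 9)
example = [
    Deployment(t0 + timedelta(hours=4), t0),
    Deployment(
        t0 + timedelta(days=1, hours=6),
        t0 + timedelta(days=1),
        failed=True,
        restored_at=t0 + timedelta(days=1, hours=8),
    ),
]
print(dora_metrics(example, window_days=7))
```

Looking at the four numbers together matters more than any one of them: a team can raise deployment frequency while its change failure rate quietly climbs.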

Code quality metrics aim to measure aspects of the codebase itself. Common code quality metrics include cyclomatic complexity, code duplication, test coverage, and static analysis results. These metrics can highlight potential maintainability issues or areas that may be prone to defects.
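Cyclomatic complexity counts the independent paths through a piece of code, roughly one plus the number of decision points. The sketch below approximates it for Python source using the standard `ast` module. The helper name `cyclomatic_complexity` and the chosen set of branch nodes are assumptions; dedicated analysis tools count some constructs (comprehension filters, `match` cases, `assert`) differently.

```python
import ast

# Node types that each add one decision point (an approximation of McCabe's rules).
_BRANCH_NODES = (
    ast.If, ast.For, ast.AsyncFor, ast.While,
    ast.IfExp, ast.ExceptHandler, ast.comprehension,
)


def cyclomatic_complexity(source: str) -> int:
    """Rough complexity of a code snippet: 1 + number of decision points."""
    tree = ast.parse(source)
    complexity = 1
    for node in ast.walk(tree):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1  # `elif` appears as a nested If, so it is counted too
        elif isinstance(node, ast.BoolOp):
            # `a or b or c` adds one short-circuit branch per extra operand.
            complexity += len(node.values) - 1
    return complexity


sample = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0 or n == 1:
        return "small"
    while n > 100:
        n //= 10
    return "large"
'''
print(cyclomatic_complexity(sample))
```

A single number like this is best read as a prompt for a closer look at a function, not as a verdict on its quality.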

Process efficiency metrics focus on the flow of work through the development pipeline. Cycle time and throughput metrics help identify bottlenecks and improve overall process efficiency.
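Both metrics can be derived from the same completion records. Here is a minimal sketch, assuming each completed work item is a hypothetical `(started_at, finished_at)` pair and that "started" means whatever your team agrees on (first commit, ticket moved to in progress, and so on).

```python
from collections import Counter
from datetime import datetime
from statistics import median


def cycle_time_and_throughput(items):
    """items: iterable of (started_at, finished_at) datetimes for completed work.

    Returns (median cycle time in days, {(iso_year, iso_week): items completed}).
    """
    items = list(items)  # iterated twice below
    cycle_days = [(done - start).total_seconds() / 86400 for start, done in items]
    # Throughput: count completions per ISO (year, week) of the finish date.
    weekly = Counter(tuple(done.isocalendar()[:2]) for _, done in items)
    return median(cycle_days), dict(weekly)


completed = [
    (datetime(2024, 1, 1), datetime(2024, 1, 3)),  # 2 days
    (datetime(2024, 1, 2), datetime(2024, 1, 6)),  # 4 days
    (datetime(2024, 1, 8), datetime(2024, 1, 9)),  # 1 day
]
print(cycle_time_and_throughput(completed))
```

The sketch reports the median rather than the mean because cycle times tend to have a long tail, where a few stalled items would otherwise dominate the average.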

You can also use metrics to quantify where your engineering time is going, and to evaluate whether the work getting done is having the desired outcome for the business. These types of business metrics go to the heart of understanding how effective an engineering organization is.

First, start measuring

Take stock of existing processes and performance levels before introducing any new measurement systems. By documenting the status quo, you’ll have a clear reference point against which to measure future improvements.

Once you’ve established your baseline, the next step is setting up automated data collection: beware of spreadsheets that humans have to fill out. Data accuracy and consistency are paramount. Ensuring them involves implementing validation checks, establishing clear definitions for each metric, and regularly auditing the data to identify and correct any discrepancies. Without reliable data, the insights derived from your metrics will be of limited value or, worse, misleading.
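Validation checks can be as simple as rejecting records that are incomplete, duplicated, or internally inconsistent. This sketch assumes hypothetical pull request records shaped as dicts with `id`, `opened_at`, and `merged_at` keys; the specific rules are illustrative, not a complete set.

```python
from datetime import datetime


def validate_records(records):
    """Split raw PR records into (valid, rejected), with a reason for each rejection."""
    valid, rejected, seen = [], [], set()
    for rec in records:
        rid = rec.get("id")
        opened, merged = rec.get("opened_at"), rec.get("merged_at")
        if rid is None or opened is None or merged is None:
            rejected.append((rec, "missing field"))
        elif rid in seen:
            rejected.append((rec, "duplicate id"))
        elif merged < opened:
            rejected.append((rec, "merged before opened"))
        else:
            seen.add(rid)
            valid.append(rec)
    return valid, rejected


raw = [
    {"id": 1, "opened_at": datetime(2024, 1, 1), "merged_at": datetime(2024, 1, 2)},
    {"id": 1, "opened_at": datetime(2024, 1, 1), "merged_at": datetime(2024, 1, 2)},
    {"id": 2, "opened_at": datetime(2024, 1, 5), "merged_at": datetime(2024, 1, 4)},
    {"id": 3, "opened_at": datetime(2024, 1, 5)},
]
ok, bad = validate_records(raw)
print(len(ok), [reason for _, reason in bad])
```

Returning rejected records with a reason, instead of silently dropping them, gives you something concrete to review during regular data audits.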

It’s important to make metrics visible and accessible to all relevant stakeholders. This could involve creating dashboards, regular reports, or integrating metrics displays into existing project management tools. The goal is to make the data easily understandable and readily available to those who need it. This transparency not only facilitates data-driven decision making but also promotes accountability across the team.

Don’t track any engineering metric at the leadership level that isn’t also visible to teams and individuals; any other approach leads to untrustworthy data and can make engineers fear what is being measured. Team members need to understand what is being measured, why it matters, and how their work impacts these metrics.

Integrating metrics into existing processes and tools is essential for long-term success. This might involve incorporating metric reviews into retrospectives, using metrics to inform project planning, or factoring metric performance into team and individual evaluations. Make metrics a natural part of how work gets done, rather than an additional burden or afterthought.

As you’re rolling out a metrics program, keep in mind:

- Select metrics that align with team and organizational goals.

- Use metrics for insight and improvement, not punishment.

- Reassess the relevance and impact of chosen metrics on a regular cadence.

- Combine quantitative data with qualitative insights (for example, developer experience surveys) for a holistic view.

The most successful implementations of engineering metrics start small, focus on a few areas of improvement, involve software engineering teams directly, and evolve over time based on the team’s needs and learnings.

Encourage team-level ownership of metrics and improvement initiatives. When team members feel a sense of ownership over the metrics and the improvement process, they’re more likely to actively drive change. This might involve assigning metric champions responsible for tracking and reporting specific metrics or creating cross-functional improvement teams to tackle particular challenges. Empower your team to propose and lead improvement initiatives based on their insights from the metrics.

When setting goals, consider your baseline measurements, industry benchmarks for software engineering metrics, and your organization’s specific challenges and constraints. Focus on steady, incremental improvements rather than dramatic overhauls. Be sensitive to team-specific circumstances that could make improvement easier or more difficult.

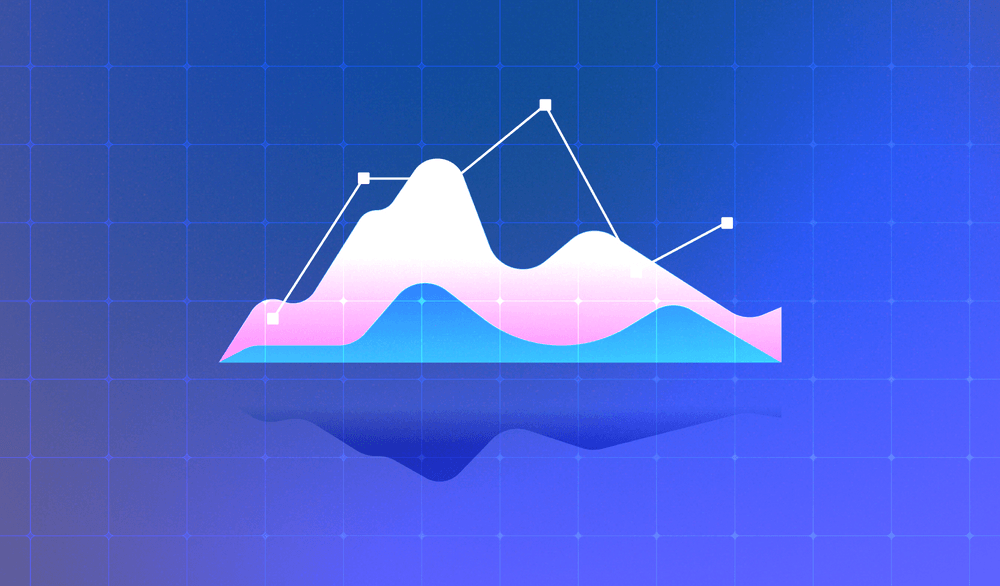

Establish a cadence for examining your metrics: weekly or bi-weekly at the team level, and monthly or quarterly at more senior levels. During these reviews, look for trends, anomalies, and correlations between different metrics. Try to understand the story behind the numbers: what factors are influencing your performance, both positively and negatively? This analysis should inform your decision-making and help you identify areas ripe for improvement.

When you identify an area for potential enhancement, consider running controlled experiments to test your hypotheses. For example, you might test different code review processes or automated testing tools if you’re trying to reduce bug rates. By comparing the metrics before and after these experiments, you can make data-driven decisions about which changes to implement more broadly.
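Before-and-after comparisons are easy to over-read when the data is noisy. One option, chosen here for illustration and not prescribed by this post, is a permutation test. It shuffles the pooled measurements many times and checks how often a random split produces a difference in medians at least as large as the one observed. The function name and parameters are hypothetical.

```python
import random
from statistics import median


def compare_before_after(before, after, n_permutations=10_000, seed=0):
    """Compare a metric (e.g. cycle time in hours) before and after a process change.

    Returns (change in median, two-sided permutation p-value).
    """
    before, after = list(before), list(after)
    observed = median(after) - median(before)
    pooled = before + after
    k = len(before)
    rng = random.Random(seed)  # fixed seed so results are reproducible
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = median(pooled[k:]) - median(pooled[:k])
        if abs(diff) >= abs(observed):
            extreme += 1
    return observed, extreme / n_permutations


cycle_hours_before = [30, 32, 35, 40, 38, 33]
cycle_hours_after = [20, 22, 25, 21, 24, 23]
print(compare_before_after(cycle_hours_before, cycle_hours_after))
```

A low p-value suggests the change is unlikely to be noise alone, but it can’t rule out other things that changed during the same period, such as team composition or the mix of projects.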

Celebrate successes, and learn from setbacks. When you achieve your improvement goals, take the time to recognize and celebrate these wins. This boosts morale and reinforces the value of your metric-driven approach. Incentivize individual contributors to take the time to fix low-hanging productivity fruit as part of their regular work. When metrics reveal areas where you’re falling short, approach these as learning opportunities rather than failures.

Remember that the ultimate goal of using metrics is not to hit arbitrary targets but to drive real improvements in your software engineering processes and business outcomes. Always keep sight of the bigger picture — how are these metrics and improvements contributing to your overall business objectives and software quality? By maintaining this perspective and consistently applying the above principles, you can leverage your metrics to foster a culture of continuous improvement in your software engineering organization.

Avoid common pitfalls

Metrics can be powerful tools for improving software engineering processes, but they come with potential pitfalls. One common mistake is an overemphasis on numbers. It’s easy to become fixated on hitting specific targets, losing sight of the broader goals those metrics are meant to support. Remember that metrics are indicators, not ends in themselves. They should inform decision-making, not dictate it.

Neglecting context in interpretation is another frequent pitfall. Raw numbers rarely tell the whole story. A sudden drop in productivity might be cause for concern, or it might reflect a necessary investment in training or a team member’s extended absence. Consider the broader context when analyzing your metrics, including team composition, project complexity, and external constraints.

Metrics can sometimes drive unintended behaviors if not carefully managed. For example, emphasizing lines of code written might encourage verbose, inefficient coding practices. Focusing too heavily on bug count could discourage developers from reporting issues. To avoid this, ensure your metrics are balanced and aligned with your objectives. Regularly review the behaviors your metrics are incentivizing and adjust as needed.

Finally, failing to evolve metrics as processes change can lead to stagnation or misalignment. As your team grows, your technology stack evolves, or your business priorities shift, metrics that were once useful may become irrelevant or even counterproductive. Regularly reassess your metrics to ensure they still provide meaningful insights and drive the right behaviors. Don’t be afraid to retire metrics that are no longer serving you well, or to introduce new ones that better reflect your current goals and challenges.

Metrics to avoid

Velocity metrics based on story points or the number of tickets completed are often misused in software development. While these metrics are intended to measure team productivity, they frequently fail to capture the true value and complexity of the work being done.

Story points are inherently subjective and can vary widely between teams, or even from sprint to sprint within the same team. They don’t account for the quality of work produced or the long-term impact of the changes made. Similarly, tracking the number of tickets completed can incentivize breaking work into smaller, less meaningful chunks or prioritizing easy tasks over more impactful but challenging work.

Tracking these metrics can lead teams to focus on inflating their “velocity” rather than delivering real value. They may rush through tasks without proper consideration for code quality, maintainability, or user needs. This approach can actually harm productivity and quality in the long run.

While all teams need to manage technical debt, it’s hard to quantify accurately. Metrics like code churn (the frequency of changes to code) can be misleading indicators of technical debt and can discourage substantial refactoring. High churn might indicate active development and improvement rather than problematic code. Conversely, legacy code with low churn might harbor significant technical debt, though that debt may not be worth fixing.
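To make the definition concrete, churn in the "frequency of changes" sense can be counted as the number of commits touching each file. This sketch assumes input in the format printed by `git log --name-only --pretty=format:`, which produces one line per changed path with blank lines between commits. The function name is hypothetical.

```python
from collections import Counter


def churn_by_file(git_log_output: str) -> Counter:
    """Count how many commits touched each file.

    Expects text like the output of `git log --name-only --pretty=format:`,
    where each commit lists each changed path once.
    """
    return Counter(
        line.strip() for line in git_log_output.splitlines() if line.strip()
    )


log_text = "src/api.py\nsrc/db.py\n\nsrc/api.py\n\nsrc/legacy/billing.py\nsrc/api.py\n"
print(churn_by_file(log_text).most_common(2))
```

The count alone doesn’t say whether a frequently changed file is being steadily improved or is a source of recurring problems. It also says nothing about the rarely touched legacy file, which is exactly why churn is a poor proxy for technical debt.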

Instead of relying on such metrics, take a more nuanced approach to assessing technical debt. This could involve regular code reviews, architecture assessments, and discussions with the development team about pain points in the codebase. Qualitative feedback from developers about areas that slow development or increase the risk of bugs can often provide more valuable insights than automated metrics alone.

When considering metrics for software development, focus on outcomes rather than outputs. Metrics that align closely with business goals and user satisfaction, such as feature usage, system reliability, or time to market for new features, often provide more meaningful insights into the effectiveness of development efforts.

There is such a thing as too much

While metrics provide valuable data points, no single metric tells the whole story. The most effective approaches combine multiple metrics and qualitative information to build a holistic view of engineering effectiveness. Still, avoid the temptation to track too many metrics at once. An overabundance of metrics can lead to several pitfalls that ultimately undermine their effectiveness.

Too many metrics can result in information overload. When teams and managers are presented with excessive data points, it becomes challenging to discern which metrics are truly meaningful and actionable. This can lead to analysis paralysis, where the abundance of information hinders rather than facilitates decision-making.

Maintaining and analyzing a large set of metrics requires significant time and resources. This overhead can detract from development work and may not provide commensurate value. Focusing on a smaller set of carefully chosen metrics that align closely with organizational goals and provide clear, actionable insights is often more beneficial.

An excessive focus on metrics can lead to unintended consequences. Teams may prioritize improving the metrics over delivering actual value, a dynamic often summarized as Goodhart’s Law: “When a measure becomes a target, it ceases to be a good measure.” This can result in gaming the system rather than genuine improvements in software quality or productivity.

Instead of tracking every metric, organizations should focus on selecting a small set of key performance indicators that provide a balanced view of their software development process. These metrics should be carefully chosen to reflect the organization’s specific goals and challenges. Regularly reviewing and adjusting these metrics ensures they remain relevant and valuable.

Metrics-aware teams can use data more effectively to drive improvements without being overwhelmed or distracted by excessive measurement. This approach allows for a deeper analysis of the most important factors influencing software development performance and outcomes.

A powerful tool for continuous improvement

When used thoughtfully, engineering metrics can be powerful catalysts for improvement in software organizations. They provide invaluable insights into processes, productivity, and quality, enabling continuous improvement and data-driven decision-making.

Remember, though: the goal of metrics isn’t to hit arbitrary targets but to use data to inform decisions that lead to better software and more effective teams. Successful implementation requires careful selection of metrics, robust data collection systems, and a commitment to regular analysis and adjustment. It also demands awareness of potential pitfalls, such as overemphasizing numbers or neglecting context in interpretation.

As you embark on or refine your metrics journey, focus on the ultimate objectives and remain open to course corrections. With the right approach, metrics can be transformative, driving positive change and fostering a culture of continuous improvement in software engineering.

More content from Swarmia