Measuring the productivity impact of AI coding tools: A practical guide for engineering leaders

Everyone’s excited about the potential of GenAI in software development, and for good reason. Tools like GitHub Copilot, Cursor, and ChatGPT are changing how developers write code. Engineering leaders we work with are seeing real productivity gains, but they also ask an important question: “How do we actually measure the impact?”

It’s a natural question. As engineering leaders, we want to understand the ROI of any new tool or practice. We want evidence that our investments are paying off and insights into optimizing their usage. But measuring the productivity impact of GenAI tools isn’t straightforward.

Through our work with engineering organizations and our research for our book on engineering effectiveness, we’ve developed a practical approach to understanding the productivity impact of these tools in your organization. This post will help you:

- Understand why traditional productivity metrics might miss the mark with GenAI

- Set up meaningful measurements that capture both immediate gains and long-term effects

- Make informed decisions about GenAI tooling based on data rather than hype

The key is to move beyond simplistic metrics and vendor claims. Yes, GitHub reports a 55% productivity increase with Copilot in certain tasks. But real-world impact will vary significantly based on your team’s context, codebase, and how you implement these tools.

Understanding the complexity

Measuring GenAI’s impact on developer productivity might seem straightforward — just track velocity or lines of code before and after adoption, right? The reality is more nuanced.

Starting without a baseline

Most engineering organizations haven’t established a clear productivity baseline. According to Gartner, only about 5% of companies currently use software engineering intelligence tools, though this is expected to grow to 70% in the coming years.

This means many teams are trying to measure the impact of GenAI tools without first understanding their “normal” productivity patterns.

The multi-tool reality

While tools like GitHub Copilot offer usage analytics, they only tell part of the story. Developers typically use a mix of AI tools — Copilot or Cursor in their IDE and ChatGPT or Claude in their browser.

Someone might only use a “smart autocomplete,” while someone else automates a tedious manual task by using the models on the command line or with an agentic mode.

This fragmented usage makes it challenging to get a complete picture of AI’s impact.

The metrics interaction problem

Engineering metrics don’t exist in isolation. Standard measurements like cycle time, batch size, and throughput influence each other in complex ways. For instance, if GenAI helps developers write code faster, they might create larger pull requests, which could actually slow down the review process and increase cycle time.

Larger changes might even lower the quality of the reviews and, thus, the quality of the codebase.

Self-selection bias in early adoption

There’s an important consideration around who adopts these tools first. Early adopters in your team are often already high performers who actively seek ways to improve their productivity. This can skew early measurements and make it harder to predict the impact across your organization.
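
To make the skew concrete, here is a minimal sketch with made-up numbers. It assumes you have a throughput figure per team before and after rollout; the field names and values are hypothetical. It contrasts a naive comparison of early adopters against everyone else with a comparison of each group's change from its own baseline.

```python
from statistics import mean

# Hypothetical completed work items per week, per team, before and after rollout.
teams = [
    {"early_adopter": True,  "before": 6.0, "after": 7.0},
    {"early_adopter": True,  "before": 7.0, "after": 8.0},
    {"early_adopter": False, "before": 4.0, "after": 4.5},
    {"early_adopter": False, "before": 3.0, "after": 3.5},
]

def naive_gap(rows):
    """Difference in post-rollout throughput between adopters and the rest."""
    adopters = mean(r["after"] for r in rows if r["early_adopter"])
    others = mean(r["after"] for r in rows if not r["early_adopter"])
    return adopters - others

def baseline_adjusted_gap(rows):
    """Difference in each group's change relative to its own baseline."""
    adopters = mean(r["after"] - r["before"] for r in rows if r["early_adopter"])
    others = mean(r["after"] - r["before"] for r in rows if not r["early_adopter"])
    return adopters - others

print(naive_gap(teams))              # 3.5
print(baseline_adjusted_gap(teams))  # 0.5
```

In this invented data set, most of the naive gap was already there before the tools arrived. Adjusting for the baseline does not remove every source of bias, but it avoids crediting the tools for differences that existed beforehand.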

Code ownership and second-order effects

Perhaps the most challenging aspect is understanding the long-term impact on code quality and team knowledge. While GenAI can speed up code generation, it could lead to:

- Decreased codebase understanding if developers rely too heavily on generated code

- Knowledge gaps when team members leave and new developers join

- Accumulated technical debt if generated code isn’t properly reviewed and understood

The ROI calculation challenge

Vendor-provided statistics, like GitHub’s reported 55% productivity increase with Copilot, often come from controlled experiments with specific use cases — like creating a new Express server. While impressive, these numbers don’t necessarily translate to real-world scenarios where developers are maintaining complex codebases or working on domain-specific problems.

The research around the SPACE framework already suggests that there is no single measure of productivity and, thus, no simple definition of return on investment.

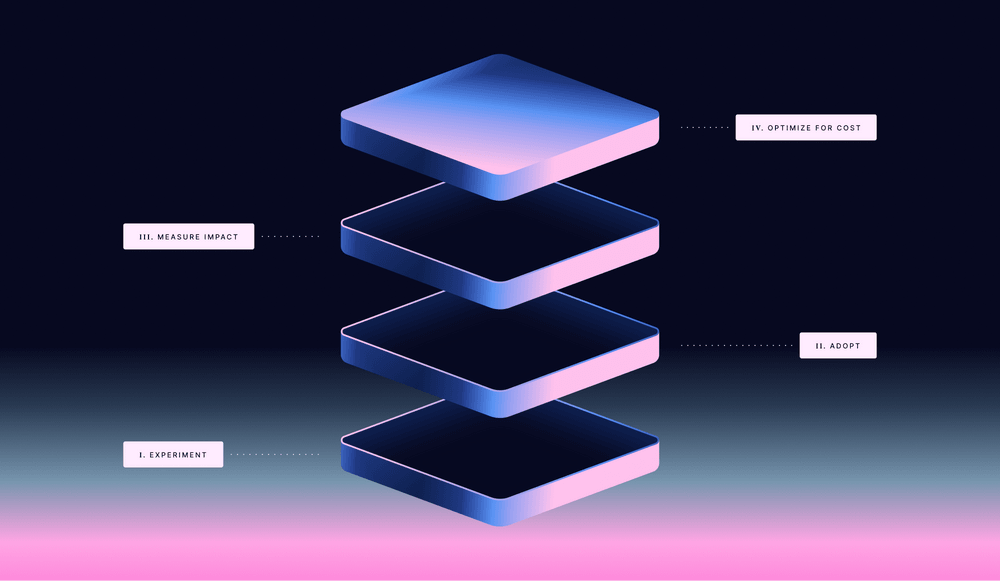

A balanced approach to measurement

Okay, so there’s no single metric. Here are some dimensions to explore for a comprehensive view of GenAI’s impact on your engineering organization.

Team collaboration and knowledge sharing

One of the key risks of GenAI tools is the potential for knowledge silos — where individual developers generate and ship code without proper team understanding. You can counter this by measuring:

- Pull request collaboration patterns: Track how many team members are involved in each feature or epic, and avoid big projects carried out by a single person

- Review depth: Monitor review thoroughness and discussion quality

- Knowledge distribution: Measure how work is spread across the team

For example, you might adopt a working agreement ensuring multiple team members collaborate on each significant feature. This helps maintain code quality while spreading knowledge organically through the team.
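
One way to check such a working agreement is sketched below. It assumes you can export pull requests with an epic identifier and an author; the record shape, names, and the `min_prs` threshold are hypothetical, not part of any particular tool.

```python
from collections import defaultdict

# Hypothetical pull request records; in practice these would come from
# your Git hosting or issue tracker export.
pull_requests = [
    {"epic": "checkout-redesign", "author": "ana"},
    {"epic": "checkout-redesign", "author": "ben"},
    {"epic": "checkout-redesign", "author": "ana"},
    {"epic": "billing-migration", "author": "chen"},
    {"epic": "billing-migration", "author": "chen"},
]

def contributors_per_epic(prs):
    """Map each epic to the set of distinct authors who opened PRs for it."""
    authors = defaultdict(set)
    for pr in prs:
        authors[pr["epic"]].add(pr["author"])
    return dict(authors)

def single_author_epics(prs, min_prs=2):
    """Return epics with at least `min_prs` PRs that all came from one person."""
    counts = defaultdict(int)
    for pr in prs:
        counts[pr["epic"]] += 1
    return sorted(
        epic
        for epic, authors in contributors_per_epic(prs).items()
        if len(authors) == 1 and counts[epic] >= min_prs
    )

print(single_author_epics(pull_requests))  # ['billing-migration']
```

A list like this is a prompt for a conversation with the team rather than a verdict. Some epics are legitimately small enough for one person.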

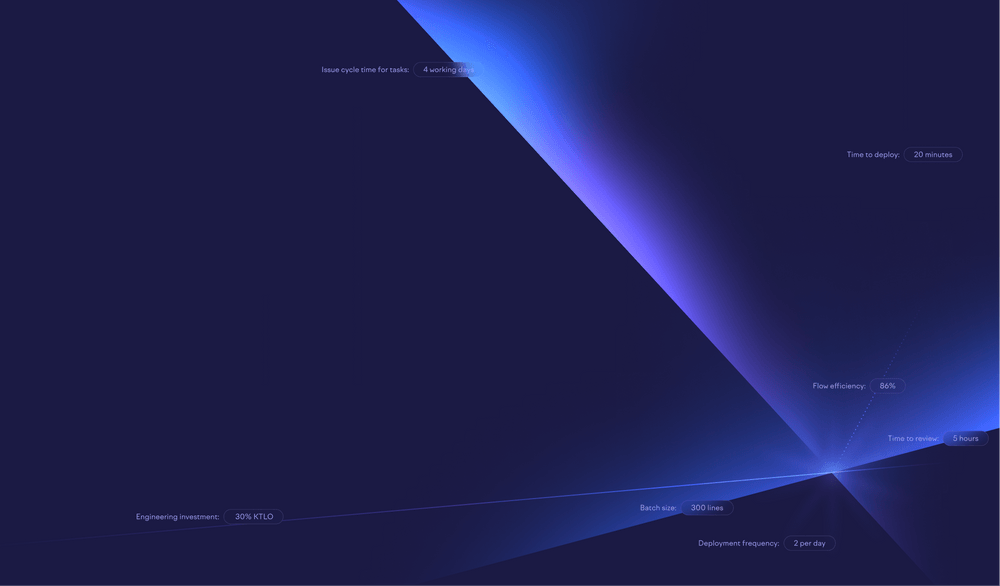

Development process metrics

While speed isn’t everything, it’s important to track how GenAI tools affect your development velocity:

- Cycle time: Track the full development lifecycle, from first commit to production

- Time in different stages: Pay special attention to coding time vs. review time

- Throughput: Monitor the rate of completed work items

You can try segmenting this data between AI-assisted and non-AI-assisted work. For instance, you might compare cycle times for pull requests where Copilot was heavily used versus those where it wasn’t.
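
A minimal sketch of that segmentation follows. It assumes each pull request carries a first-commit timestamp, a production deployment timestamp, and an `ai_assisted` flag (for instance derived from a label that authors apply themselves). All field names and data are hypothetical.

```python
from datetime import datetime
from statistics import median

# Hypothetical records. The `ai_assisted` flag is an assumption: it could
# come from a PR label that authors apply themselves.
pull_requests = [
    {"first_commit": "2025-03-03T09:00", "deployed": "2025-03-04T09:00", "ai_assisted": True},
    {"first_commit": "2025-03-03T10:00", "deployed": "2025-03-06T10:00", "ai_assisted": True},
    {"first_commit": "2025-03-04T08:00", "deployed": "2025-03-06T08:00", "ai_assisted": False},
    {"first_commit": "2025-03-05T12:00", "deployed": "2025-03-09T12:00", "ai_assisted": False},
]

def cycle_time_hours(pr):
    """Hours from first commit to production deployment."""
    start = datetime.fromisoformat(pr["first_commit"])
    end = datetime.fromisoformat(pr["deployed"])
    return (end - start).total_seconds() / 3600

def median_cycle_time_by_segment(prs):
    """Median cycle time in hours for AI-assisted and other PRs."""
    segments = {True: [], False: []}
    for pr in prs:
        segments[pr["ai_assisted"]].append(cycle_time_hours(pr))
    return {
        "ai_assisted": median(segments[True]) if segments[True] else None,
        "not_ai_assisted": median(segments[False]) if segments[False] else None,
    }

print(median_cycle_time_by_segment(pull_requests))
# {'ai_assisted': 48.0, 'not_ai_assisted': 72.0}
```

The median is used here because cycle times tend to have long tails. Keep in mind that a gap between the segments may reflect who uses the tools and for which tasks, not only the tools themselves.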

Batch size monitoring

AI tools make it easier to write more code quickly, which can lead to increased batch sizes. Track pull request sizes and encourage smaller, more digestible changes. Large batches often lead to delayed code reviews and lower review quality.
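
As a rough sketch of how to watch for this, the example below groups pull requests into size buckets and reports the median review time for each. The bucket boundaries, field names, and data are illustrative assumptions, not recommended thresholds.

```python
from statistics import median

# Hypothetical records: lines changed and hours from review request to approval.
pull_requests = [
    {"lines_changed": 40, "review_hours": 2},
    {"lines_changed": 120, "review_hours": 5},
    {"lines_changed": 90, "review_hours": 3},
    {"lines_changed": 650, "review_hours": 30},
    {"lines_changed": 1200, "review_hours": 52},
]

# Upper bounds per bucket; illustrative only.
SIZE_BUCKETS = [("small", 100), ("medium", 500), ("large", float("inf"))]

def size_bucket(lines_changed):
    """Return the name of the first bucket whose upper bound fits the PR."""
    for name, upper in SIZE_BUCKETS:
        if lines_changed <= upper:
            return name

def median_review_hours_by_size(prs):
    """Median review time per size bucket, for buckets that have any PRs."""
    grouped = {}
    for pr in prs:
        grouped.setdefault(size_bucket(pr["lines_changed"]), []).append(pr["review_hours"])
    return {name: median(hours) for name, hours in grouped.items()}

print(median_review_hours_by_size(pull_requests))
```

Tracking the share of pull requests that land in the largest bucket over time is one simple way to see whether batch sizes are creeping up after adoption.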

Code quality indicators

Quality often suffers when teams focus solely on speed. Track these indicators to ensure AI tools aren’t creating hidden technical debt:

- Bug backlog trends

- Production incident rates

- Change failure rate and time to recovery

- Proportion of maintenance work vs. new feature development

- Code review feedback patterns
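
Two of these indicators reduce to simple ratios. The sketch below assumes you can tag each deployment with whether it caused an incident and each work item with a type; the field names, the type labels, and the data are hypothetical.

```python
# Hypothetical records; field names are assumptions for illustration.
deployments = [
    {"id": "d1", "caused_incident": False},
    {"id": "d2", "caused_incident": True},
    {"id": "d3", "caused_incident": False},
    {"id": "d4", "caused_incident": False},
    {"id": "d5", "caused_incident": False},
]

work_items = [
    {"id": "w1", "type": "feature"},
    {"id": "w2", "type": "feature"},
    {"id": "w3", "type": "bug"},
    {"id": "w4", "type": "feature"},
    {"id": "w5", "type": "chore"},
    {"id": "w6", "type": "feature"},
    {"id": "w7", "type": "bug"},
    {"id": "w8", "type": "feature"},
]

def change_failure_rate(deploys):
    """Share of deployments that led to an incident, or None if there were none."""
    if not deploys:
        return None
    return sum(1 for d in deploys if d["caused_incident"]) / len(deploys)

def maintenance_share(items, maintenance_types=("bug", "chore")):
    """Share of work items classified as maintenance, or None if there were none."""
    if not items:
        return None
    return sum(1 for i in items if i["type"] in maintenance_types) / len(items)

print(change_failure_rate(deployments))  # 0.2
print(maintenance_share(work_items))     # 0.375
```

Comparing these ratios across periods before and after adoption is more informative than any single snapshot.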

Developer experience and sentiment

Quantitative metrics only tell part of the story. Gather qualitative feedback through:

- Regular developer experience surveys

- Structured annotations on pull requests (for example, labels for changes where GenAI tools had a significant impact)

- Team retrospectives focused on AI tool usage

Consider these specific survey questions:

- “I use AI tools in most of my programming work.”

- “AI tools help me program faster.”

- “I rarely notice AI tools cause quality issues in our team.”

The idea is to look at the overall sentiment and individual experiences separately.
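
A small sketch of that split, assuming answers are collected on a 1 to 5 agreement scale. The respondent IDs, question keys, and cutoffs (4 and above counts as agreement, 2 and below as disagreement) are all assumptions for illustration.

```python
# Hypothetical survey answers on a 1-5 scale (5 = strongly agree).
responses = {
    "dev_a": {"uses_ai_often": 5, "faster": 4, "rarely_quality_issues": 2},
    "dev_b": {"uses_ai_often": 4, "faster": 4, "rarely_quality_issues": 4},
    "dev_c": {"uses_ai_often": 2, "faster": 3, "rarely_quality_issues": 4},
    "dev_d": {"uses_ai_often": 5, "faster": 5, "rarely_quality_issues": 1},
}

def agreement_share(answers, question, threshold=4):
    """Overall sentiment: share of respondents scoring at or above `threshold`."""
    scores = [a[question] for a in answers.values()]
    return sum(1 for s in scores if s >= threshold) / len(scores)

def disagreeing_respondents(answers, question, threshold=2):
    """Individual experiences: respondents scoring at or below `threshold`."""
    return sorted(name for name, a in answers.items() if a[question] <= threshold)

print(agreement_share(responses, "faster"))                         # 0.75
print(agreement_share(responses, "rarely_quality_issues"))          # 0.5
print(disagreeing_respondents(responses, "rarely_quality_issues"))  # ['dev_a', 'dev_d']
```

In this invented example, the overall picture on speed is positive, while two respondents report quality problems that the average alone would hide. Those are the people worth following up with.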

Usage patterns

While tool usage alone doesn’t indicate success, tracking adoption patterns can provide valuable insights:

- Which teams are using AI tools most effectively?

- What types of tasks see the highest AI tool usage?

- Are there patterns in when developers choose not to use AI assistance?

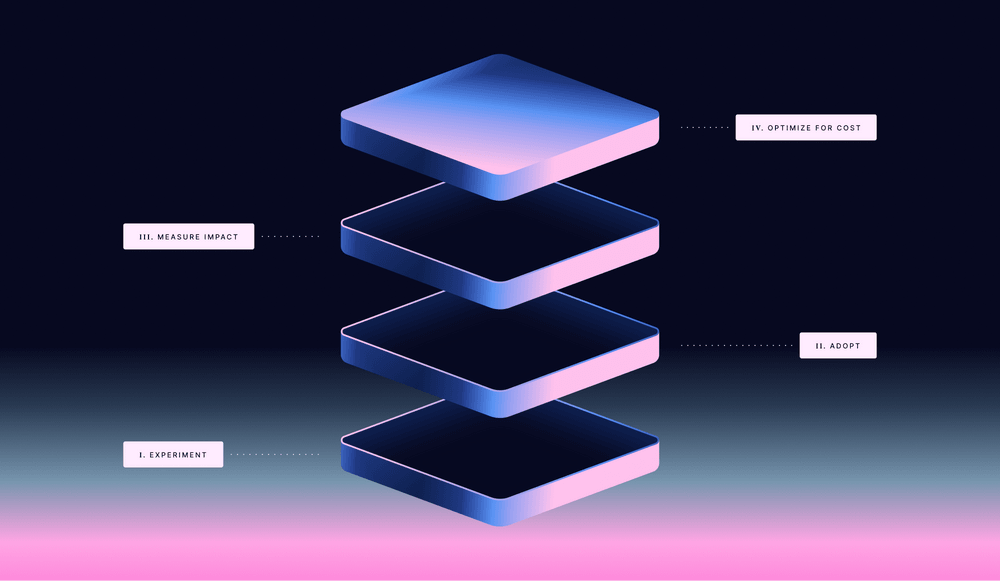

Making it work in practice

Successfully measuring and optimizing GenAI’s impact requires more than just tracking metrics. Here’s how to create an environment where both measurement and adoption can thrive.

Create space for learning

Development teams need dedicated time to experiment with and master these tools. Consider:

- Regular hackathons focused on AI tool exploration

- Internal knowledge-sharing sessions where developers demonstrate effective usage patterns

- Dedicated Slack channels for sharing AI tool tips and success stories

For example, at Swarmia, our bi-annual hackathons have proven valuable for AI tool adoption. Before ChatGPT was available for businesses, our team built a Slack bot for the OpenAI API, which helped everyone learn from each other’s queries and use cases — as all of this happened on a public Slack channel.

Invest in tool configuration

Many tools can read codebase documentation in plain English and use it to understand which files should be edited. Investing in this documentation can also benefit the humans working on the system — AI might have finally made documentation cool again.

It’s important to have good defaults for the development environments, but it’s equally important to ensure everyone tries these tools at least once. This way, they’ve overcome the setup hurdles, making incremental adoption possible.

Look for high-leverage projects

GenAI tools can yield outsized returns on projects that were previously considered too laborious to complete.

If you’re in the middle of a big migration project from one framework to another, try using agentic tools to help with the shoveling. The cognitive load of being between two frameworks is likely significant, and eliminating a category of technical debt can be a major productivity boost.

Embrace incremental adoption and developer choice

Allow teams to find their own path to effective AI tool usage. Some developers and tasks will benefit more than others, and that’s okay. Regularly reassess your approach as AI capabilities evolve — what wasn’t effective six months ago might be transformative today. Trust your teams to make good decisions about where and when to leverage AI assistance.

That said, if a team has a negative experience with a tool, check back after 3-6 months. This industry is moving faster than anything we’ve seen before.

Make progress visible

Without transparency, people not using these tools might assume that others are not using them either. Share success stories and learnings across teams to inspire and educate. Track and communicate adoption metrics in a way that emphasizes learning rather than comparison. Celebrate innovative uses of AI tools that advance your organization’s goals.

For example, we organized an internal event that we named “AI Festival.”

Address common concerns

Be proactive about addressing team anxieties:

- Establish clear guidelines for code review of AI-generated content

- Create policies around AI tool usage with sensitive code

- Be transparent about how metrics will (and won’t) be used

- Focus on team-level improvements rather than individual performance

In summary

Measuring GenAI’s impact on developer productivity isn’t about finding a single magic metric. Instead of chasing vendor-quoted numbers, focus on a balanced set of indicators: collaboration patterns, cycle time, code quality, and developer experience. Success comes from creating an environment where teams can experiment, learn, and adapt — while maintaining the engineering practices that make great software possible.

The tools are promising, but it’s the thoughtful implementation that turns promise into real results.
