Well-researched advice on software team productivity

Understanding how individual people and productive teams behave is crucial for improving team performance. Often, it can be hard to distinguish ideological advice from insights grounded in research. Here's a list of four reliable resources every software leader should know.

During my tech career, I've met a multitude of idealistic people who believe following idea X or methodology Y will somehow magically transform a team or an organization. In reality, if you want to make software teams more productive, there are no silver bullets.

Every software organization is different. People come from different cultures and circumstances. They have varying levels of experience, motivations, and personalities.

The only real way forward is a relentless process of incremental improvement:

1. Identify bottlenecks

2. Experiment with a possible solution

3. Measure

4. Analyze

5. Repeat

It’s simple advice but hard to put into practice. One of the biggest challenges in this process of continuous improvement is identifying what you should measure and what kind of behavior you should foster.
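To make the measure and analyze steps concrete, here is a minimal Python sketch that compares a team metric before and after an experiment. It assumes you already collect one value per work item, using cycle time in hours as the example, and the function name `compare_medians` is hypothetical. It uses the median because a few outlier items can skew an average. It does not test statistical significance, so treat small samples with caution.

```python
from statistics import median


def compare_medians(baseline: list[float], experiment: list[float]) -> float:
    """Relative change in the median; negative means lower than the baseline."""
    if not baseline or not experiment:
        raise ValueError("both samples need at least one observation")
    before = median(baseline)
    after = median(experiment)
    if before == 0:
        raise ValueError("baseline median is zero; relative change is undefined")
    return (after - before) / before


# Hypothetical cycle times (hours) before and during an experiment.
change = compare_medians([30, 42, 55, 61, 70], [25, 33, 40, 48, 52])
print(f"{change:+.0%}")  # -27%
```

For a metric where lower is better, such as cycle time, a negative result suggests the experiment helped. Whether to keep the change is still a judgment call for the analyze step.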

The four resources in this article will give you reliable advice that will help you make meaningful improvements in your organization.

Project Aristotle: Understanding team effectiveness

If you've heard that "psychological safety" is the most important factor for creating productive teams, you've heard about Project Aristotle. Project Aristotle is Google's best-known and most-quoted research on what makes a team effective.

The main finding of this research: How teams work together is more important than who is on the team.

The five key dynamics that affect team effectiveness, in order of priority, are:

- Psychological safety: Team members feel safe to take risks and be vulnerable in front of each other.

- Dependability: Team members get things done on time with the expected level of quality.

- Structure and clarity: Team members have clear roles, plans, and goals.

- Meaning: Work is personally important to team members.

- Impact: Team members think their work matters and creates change.

The researchers have also provided a list of improvement indicators for each key dynamic. These are signs that your team needs to improve a particular dynamic:

- Psychological safety: Fear of asking for or giving constructive feedback. Hesitance around expressing divergent ideas and asking "silly" questions.

- Dependability: Team has poor visibility into project priorities or progress. Diffusion of responsibility and no clear owners for tasks or problems.

- Structure and clarity: Lack of clarity about who is responsible for what. Unclear decision-making process, owners, or rationale.

- Meaning: Work assignments based solely on ability, expertise, and workload. There's little consideration for individual development needs and interests. Lack of regular recognition for achievements or milestones.

- Impact: Framing work as "treading water." Too many goals, limiting the ability to make meaningful progress.

To understand how your team feels about these dynamics, you can use the discussion tool Google published alongside the research. It gives you questions to discuss together, which help your team determine its own needs and commit to making improvements.
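If you collect short anonymous answers before the discussion, you can summarize them per dynamic to decide where to start the conversation. The sketch below assumes each response scores every dynamic on a hypothetical 1 to 5 agreement scale. The data format, the 3.5 threshold, and the function names are illustrative assumptions, not part of Google's tool.

```python
from statistics import mean

DYNAMICS = ("psychological safety", "dependability", "structure and clarity",
            "meaning", "impact")


def dynamic_averages(responses: list[dict[str, int]]) -> dict[str, float]:
    """Average the 1-5 scores given to each dynamic across all responses."""
    averages = {}
    for dynamic in DYNAMICS:
        scores = [r[dynamic] for r in responses if dynamic in r]
        if scores:  # skip dynamics nobody answered
            averages[dynamic] = mean(scores)
    return averages


def weakest_dynamics(averages: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Dynamics averaging below the threshold, lowest first."""
    below = [d for d, avg in averages.items() if avg < threshold]
    return sorted(below, key=lambda d: averages[d])


# Hypothetical answers from two team members.
responses = [
    {"psychological safety": 2, "dependability": 4, "structure and clarity": 3,
     "meaning": 5, "impact": 4},
    {"psychological safety": 3, "dependability": 5, "structure and clarity": 3,
     "meaning": 4, "impact": 4},
]
print(weakest_dynamics(dynamic_averages(responses)))
# ['psychological safety', 'structure and clarity']
```

Treat the output as a discussion starter, not a verdict. A low average tells you which dynamic to talk about, not why the team feels that way.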

DORA: Four key performance metrics

The DevOps Research and Assessment (DORA) team is a research group that started independently in 2014 and has been part of Google since 2019. They're known for the annual "State of DevOps" reports.

The DORA team conducted seven annual global surveys between 2014 and 2021, collecting data from more than 32,000 software professionals. From this research, the DORA team identified four key performance metrics that indicate the performance of a software development team and its ability to deliver better business outcomes.

These four DORA metrics are:

- Deployment frequency: How often an organization deploys to production.

- Lead time for changes: The time it takes to get committed code to run in production.

- Change failure rate: The percentage of production deployments that degrade service and require remediation (e.g., a rollback).

- Time to restore service: How long it takes to fix a failure in production.

The first two metrics, deployment frequency and lead time for changes, measure the velocity of your engineering teams. The other two, change failure rate and time to restore service, indicate stability. One of the main points of the DORA research is that successful teams can improve their velocity while still achieving great stability.
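As a rough illustration, the four metrics can be computed from a list of deployment records. This Python sketch assumes a hypothetical `Deployment` record holding the deployment time, the timestamps of the commits it shipped, whether it failed, and when service was restored. It simplifies time to restore as the time from the failing deployment to restoration and reports medians. Real tooling usually measures from when a failure was detected and may aggregate differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    deployed_at: datetime
    commit_times: list[datetime]            # when each shipped commit was made
    failed: bool = False                    # degraded service, needed remediation
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute simplified DORA metrics for the deployments in one period."""
    if not deploys or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    # Lead time for changes: commit time -> running in production, per commit.
    lead_times = [hours(d.deployed_at - t) for d in deploys for t in d.commit_times]
    failures = [d for d in deploys if d.failed]
    # Simplification: restore time is measured from the failing deployment.
    restore_times = [hours(d.restored_at - d.deployed_at)
                     for d in failures if d.restored_at is not None]
    nan = float("nan")
    return {
        "deployments_per_day": len(deploys) / period_days,
        "median_lead_time_hours": median(lead_times) if lead_times else nan,
        "change_failure_rate": len(failures) / len(deploys),
        "median_time_to_restore_hours": median(restore_times) if restore_times else nan,
    }


# Hypothetical week with two deployments, one of which failed.
start = datetime(2024, 1, 1, 12)
deploys = [
    Deployment(start, [start - timedelta(hours=4), start - timedelta(hours=2)]),
    Deployment(start + timedelta(days=1), [start + timedelta(hours=18)],
               failed=True, restored_at=start + timedelta(days=1, hours=1)),
]
print(dora_metrics(deploys, period_days=7))
```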

The four DORA metrics are the most popular and well-known part of this research. However, the research includes many other important insights you should be aware of when applying the metrics. For example, the study lists 24 key capabilities that correlate with team performance.

The research methodology and results of the State of DevOps are explained in detail in the book Accelerate. It is a must-read for anyone interested in improving engineering team productivity.

While the DORA metrics are very useful, you should not treat them as the one and only measure for improving engineering. The research focuses on identifying practices that correlate with a company's financial performance, not on providing a roadmap for improving engineering.

SPACE: A framework for developer productivity

SPACE is a framework for developer productivity. It looks at developer productivity from the perspective of different organizational levels (individuals, teams & groups, and systems) and dimensions (Satisfaction & Well-being, Performance, Activity, Communication & Collaboration, and Efficiency & Flow).

Nicole Forsgren, a member of the DORA team and co-author of the book Accelerate, led this study. The goal of SPACE is to give a more complete and nuanced picture of developer productivity beyond the specific metrics included in DORA.

The SPACE framework aims to overcome the flaws of earlier attempts to measure developer productivity. These "myths and misconceptions about developer productivity" include:

- Productivity is all about developer activity.

- Productivity is only about individual performance.

- One productivity metric can tell us everything.

- Productivity measures are useful only for managers.

- Productivity is only about engineering systems and developer tools.

To counteract these myths, the SPACE framework "provides a way to think rationally about productivity in a much bigger space." It lists five different dimensions of developer productivity you should measure instead:

- Satisfaction and well-being: "Satisfaction is how fulfilled developers feel with their work, team, tools, or culture; well-being is how healthy and happy they are, and how their work impacts it." Example metrics include employee satisfaction, developer efficacy, and burnout.

- Performance: Trying to measure performance through outputs often leads to suboptimal business outcomes. Therefore, for software development, performance is best evaluated as outcomes instead of output. Example metrics include reliability, absence of bugs, and customer adoption.

- Activity: Countable activities that happen when performing work, such as design documents, commits, and incident mitigation, are almost impossible to measure comprehensively. Therefore, you should never use them in isolation and instead always balance them with metrics from other dimensions.

- Communication and collaboration: How well do teams communicate and work together? Example metrics include discoverability of documentation, quality of reviews, and onboarding times for new team members.

- Efficiency and flow: These "capture the ability to complete work or make progress on it with minimal interruptions or delays." Example metrics include the number of handoffs in a process, perceived ability to stay in flow, and time measures through the system (total time, value-added time, and wait time).
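The time measures in the last bullet can be derived from how long a work item spent in each stage. This sketch assumes a hypothetical stage log where each stage is marked as active (someone was working on the item) or waiting, and has an owner. It treats each change of owner between consecutive stages as a handoff. Flow efficiency, the share of total time that was value-added, is one common way to summarize the result.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    hours: float
    active: bool  # True if someone worked on the item, False if it waited
    owner: str    # person or team responsible during this stage


def flow_times(stages: list[Stage]) -> dict[str, float]:
    """Total, value-added, and wait time for one work item, plus handoffs."""
    total = sum(s.hours for s in stages)
    value_added = sum(s.hours for s in stages if s.active)
    # Count a handoff whenever ownership changes between consecutive stages.
    handoffs = sum(1 for a, b in zip(stages, stages[1:]) if a.owner != b.owner)
    return {
        "total_hours": total,
        "value_added_hours": value_added,
        "wait_hours": total - value_added,
        "flow_efficiency": value_added / total if total else 0.0,
        "handoffs": handoffs,
    }


# Hypothetical history of a single work item.
item = [
    Stage("coding", 6, True, "developer"),
    Stage("waiting for review", 18, False, "developer"),
    Stage("review", 2, True, "reviewer"),
    Stage("waiting for deploy", 8, False, "platform team"),
    Stage("deploy", 1, True, "platform team"),
]
print(flow_times(item))
```

In this made-up example, most of the elapsed time is waiting rather than work, which is the kind of signal the efficiency and flow dimension is meant to surface.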

For a fuller picture of what is happening in your organization, the SPACE framework suggests that you have metrics for these dimensions on three different levels of your organization:

- Individual: One person.

- Team or group: People who work together.

- System: End-to-end work through a system, e.g., the development pipeline of your organization from design to production.

The SPACE framework authors provide an example table with metrics for each dimension and organization level. It gives you a concrete idea of what metrics you could have in your organization. The metrics proposed in the example are quite GitHub-centric. This is natural, as the SPACE framework researchers mostly work at GitHub and Microsoft (which owns GitHub).

The example table brings us to the biggest strength and challenge of the SPACE framework: You will have to make it your own. There are multiple options for what you could use as a metric in your organization, and the metrics you choose depend on what fits your culture and the data you have available.

There are three things to keep in mind with SPACE. First, you should include metrics from at least three dimensions. Second, you should include metrics from all levels of the organization. Third, at least one of the metrics should be a perceptual measure, such as survey data. Including perceptions about people's lived experiences can often provide more complete and accurate information than metrics gathered from system behavior alone.

When the above recommendations are fulfilled, you will be able to construct a more complete picture of productivity.
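The three recommendations above are easy to check mechanically once you list your metrics. The following Python sketch assumes each metric is tagged with a dimension, a level, and whether it is perceptual. The short dimension labels, the `Metric` type, and `space_gaps` are hypothetical; the example metric names are taken from the dimension descriptions earlier in this section.

```python
from dataclasses import dataclass

DIMENSIONS = {"satisfaction", "performance", "activity", "communication", "efficiency"}
LEVELS = {"individual", "team", "system"}


@dataclass(frozen=True)
class Metric:
    name: str
    dimension: str    # one of DIMENSIONS (shortened SPACE dimension names)
    level: str        # one of LEVELS
    perceptual: bool  # True if it comes from surveys or other self-reports


def space_gaps(metrics: list[Metric]) -> list[str]:
    """List which of the three recommendations a metric set misses."""
    dims = {m.dimension for m in metrics}
    levels = {m.level for m in metrics}
    unknown = (dims - DIMENSIONS) | (levels - LEVELS)
    if unknown:
        raise ValueError(f"unknown dimension or level: {sorted(unknown)}")
    gaps = []
    if len(dims) < 3:
        gaps.append(f"covers {len(dims)} dimension(s); use at least 3")
    missing = LEVELS - levels
    if missing:
        gaps.append("no metrics at level(s): " + ", ".join(sorted(missing)))
    if not any(m.perceptual for m in metrics):
        gaps.append("no perceptual measure, e.g. survey data")
    return gaps


catalog = [
    Metric("employee satisfaction", "satisfaction", "individual", True),
    Metric("quality of reviews", "communication", "team", False),
    Metric("total time through the pipeline", "efficiency", "system", False),
]
print(space_gaps(catalog))  # []
```

A check like this only confirms coverage. Whether the chosen metrics fit your culture and data is still the part you have to work out yourself.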

Having multiple metrics will also likely put some of them in tension with each other. This is by design. A more balanced view should help you make smarter decisions and tradeoffs across your teams and the whole organization. It also keeps you from optimizing a single metric to the detriment of the entire system.

If you want to start applying the SPACE framework to your organization, you can take a look at our free editable canvas (PDF, Google Docs).

Retrospectives: We may be lying to ourselves

Software organizations often use retrospectives as a mechanism for continuous improvement. Many people have inherited this practice from some vein of agile thinking. Some hold them because they've personally had some good experiences with them, others because they feel that "they are a best practice."

Retrospectives can be an excellent tool for continuous improvement, and you should probably use them in some form if you're not already doing so. However, know that retrospectives have their limits. This study on retrospectives does a great job of highlighting some of the common challenges that you're likely to encounter.

First, team-level retrospectives are often "weak at recognizing issues and successes related to the process areas that are external to the concerns of the teams." Software teams often talk—or complain—about organization-level issues in retrospectives: collaboration with sales, product managers, general management, etc. However, this discussion rarely results in any tangible changes in the broader organization.

If you want to hold effective retrospectives and drive change, focus mainly on topics that the team can control or directly affect. When the team regularly discusses subjects outside its direct influence, make sure the feedback is addressed at the organizational level, where real change can happen.

Alternatively, the team's understanding of the surrounding business and the organization's goals may be incorrect. In these cases, aim to give the team more context.

Second, software team members have biases. For example, "the development teams tend to find people factors as more positive and avoid stating themselves as a target for improvement." The study also lists situations where the way the team felt did not correspond with the real-world evidence on what had happened during the past few weeks.

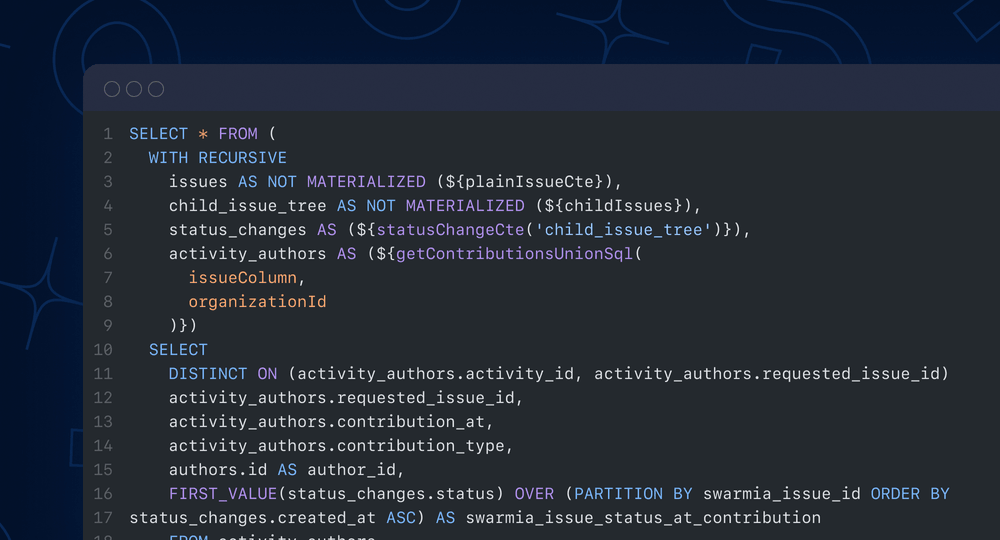

To counteract biases, don’t rely purely on the personal experiences of the team members. Instead, include "fact-based evidence to the retrospective in order to base the analysis on a more objective picture of the real situation." This study proposes the use of a visual timeline of project events collected from various sources. There are many ways you could do this with your team. You can check out Swarmia's work log as an example.
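A timeline like this can be assembled by merging events from the tools your team already uses and sorting them by time. The sketch below is a minimal version under assumed inputs: the `Event` type, the source names, and the text format are hypothetical. In practice, each list would come from your issue tracker, CI system, or incident log.

```python
from datetime import datetime
from itertools import chain
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    at: datetime
    source: str       # e.g. issue tracker, CI, incident log (hypothetical names)
    description: str


def build_timeline(*sources: Iterable[Event]) -> list[Event]:
    """Merge events from several sources into one list ordered by time."""
    return sorted(chain(*sources), key=lambda e: e.at)


def render(timeline: list[Event]) -> str:
    """One line per event, oldest first."""
    return "\n".join(f"{e.at:%Y-%m-%d %H:%M}  [{e.source}] {e.description}"
                     for e in timeline)


issues = [Event(datetime(2024, 3, 4, 9), "issues", "Sprint started")]
ci = [Event(datetime(2024, 3, 6, 15), "ci", "Deploy failed"),
      Event(datetime(2024, 3, 5, 11), "ci", "Deployed v1.2")]
incidents = [Event(datetime(2024, 3, 6, 16), "incidents", "Checkout degraded")]
print(render(build_timeline(issues, ci, incidents)))
```

Reviewing the rendered timeline at the start of a retrospective gives the team a shared, factual starting point before people share how the period felt.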

Godspeed!

I believe understanding the principles and mechanisms above will help you improve your software team's performance. This research also drives our product development at Swarmia. We've seen teams following this advice cut their cycle time by 40% within two months.

Swarmia condenses much of the above information into a single tool that is designed to give you easy access to all the key metrics and help you continuously improve.
